Gina Pingitore and Dan Seldin

Research using mobile devices is new, different and growing. How does it impact response rates and behaviour?

Advances in telecommunications are changing the landscape of market research. Current mobile phone penetration is estimated at more than 80% globally, compared to 25% for internet access, 19% for fixed phone lines and 12% for personal computers.

While the number of consumers accessing the internet via mobile devices is increasing rapidly, the growth rate of fixed-line coverage is declining. These trends make it all the more important for market and social science researchers to understand how to blend, leverage, and maximise the quality of data collected across different communications platforms.

As a leading global research firm, J.D. Power and Associates explored how to best take advantage of the rise in mobile devices for collecting data. The following are the five most important findings we gleaned from our analysis of data collected using mobile devices, compared to traditional online methods.

1. Mobile respondents are different

We researchers need to understand the extent to which the sample frame and sample source provide wide coverage of the target population. As respondents in most countries must first opt in to be contacted via online or mobile platforms, we wanted to know if respondents who opt in for contact via mobile devices are similar to those who opt in for traditional online contact.

The results of a 2011 survey of over 600,000 US members from a major panel provider suggest there are key differences: only 17% of online opt-in panelists indicated that they would be extremely likely to opt in for mobile surveys, whereas 30% indicated they would not be at all likely to opt in.

To assess the sample frame coverage further, we compared the demographic profile of panelists who opted in for mobile contact to that of online panelists across different panel providers. While opt-in rates and overall sample volumes differed by sample provider, we found that mobile opt-ins tended to be younger, were more likely to be employed full-time, and had higher incomes. Furthermore, some providers were able to recruit African-American and Hispanic-American consumers for mobile contact at higher rates than for online.

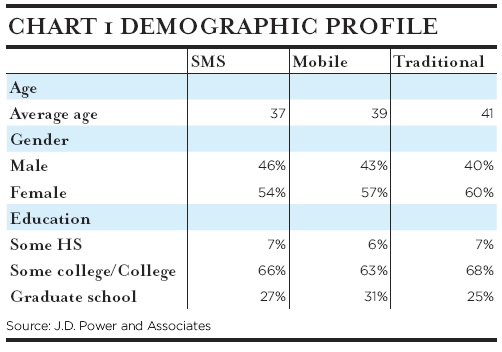

Finally, we examined the profiles of respondents who completed surveys in three different modes: mobile web, SMS and traditional online. Results from two studies conducted in the US between November 2010 and January 2011 showed that SMS respondents skewed the youngest, and that mobile web respondents had a higher level of education than online respondents.

2. Mobile web response rates are lower

Are response rates among consumers who respond to a survey via a mobile device different from those of consumers who respond to an online survey via their personal computer?

To answer this question we collaborated with one of our international panel providers and randomly assigned about 4,300 opt-in panelists to one of three data collection methods, asking them all to complete the same seven-item survey regarding their experience with their handset.

Group One was assigned to the traditional online method. An outbound email with a survey link was sent to panelists.

Group Two was assigned to a mobile web method. An outbound SMS message with a link to a mobile web survey was sent to panelists.

Group Three was assigned to an SMS method. Panelists were instructed to send a text message containing a key word to initiate the survey.
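
The three-way random assignment described above can be sketched in a few lines of Python. This is only an illustration: the article does not say how panelists were randomised or whether the groups were equal in size, so the round-robin split, the function name `assign_groups` and the method labels are all assumptions, and the figure of 4,300 stands in for "about 4,300".

```python
import random

def assign_groups(panelist_ids, methods=("online", "mobile_web", "sms"), seed=0):
    """Shuffle panelists, then deal them round-robin into one group per method.

    Equal-sized groups are an assumption; the article reports only the total.
    """
    rng = random.Random(seed)          # fixed seed so the split is reproducible
    shuffled = list(panelist_ids)
    rng.shuffle(shuffled)
    groups = {method: [] for method in methods}
    for i, pid in enumerate(shuffled):
        groups[methods[i % len(methods)]].append(pid)
    return groups

# Hypothetical panel of 4,300 numeric IDs
groups = assign_groups(range(4300))
for method, members in groups.items():
    print(method, len(members))
```

Shuffling before dealing means each panelist has the same chance of landing in any group, which is what allows differences in response rate to be attributed to the collection method rather than to who was asked.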

Survey response rates varied widely across the three methods, with mobile web yielding the lowest response rate at 39%, compared to traditional online at 45% and SMS at 60%.

To check whether these response rates would hold, we repeated the research design with a different sample provider and topic. This time about 10,000 panelists were randomly assigned to complete a 16-item survey about their experiences with their credit card.

Again, mobile web yielded the lowest response rate at 32%. The response rate for SMS was similar to that of the first test at 56%, while the traditional online rate was considerably higher at 78%, possibly the result of a more targeted recruitment strategy.
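
One way to judge whether a gap between two response rates is larger than chance would produce is a two-proportion z-test. The sketch below is not taken from the studies: the article does not say which test, if any, was used, and it does not report group sizes, so the counts in the usage example are hypothetical and chosen only to reproduce rates of 45% and 39%.

```python
import math

def two_proportion_z(resp_a, n_a, resp_b, n_b):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a, p_b = resp_a / n_a, resp_b / n_b
    pooled = (resp_a + resp_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# HYPOTHETICAL group sizes (1,000 invited per method); only the rates
# 45% (traditional online) and 39% (mobile web) come from the article.
z, p = two_proportion_z(450, 1000, 390, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With real invitation and completion counts in place of the hypothetical ones, the same function can be applied to any pair of methods.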

The lower response rate for mobile web could be due to slow mobile internet connectivity and the smaller screen size of most handsets. As network quality and device functionality improve, the mobile web response rate is expected to increase.

Another factor that can impact response rates is cost. In many countries, including the US and Canada, the receiving party pays for mobile calls. This means that respondents could incur an expense. While many consumers are enrolled in unlimited data and text plans, these services can be expensive, which may in turn affect response rates. Under these circumstances, researchers must consider additional forms of reimbursement or other incentives to comply with industry codes such as ESOMAR’s, and to achieve higher participation rates.

3. Mobile surveys yield similar results

Since consumers often multitask when using mobile devices, they may be less attentive while answering survey questions via this platform. This might cause them to be more “satisficing” in their responses. To examine the comparability of results gathered from different communications platforms, we analysed the results from both the credit card and handset studies, focusing on measures such as straight-lining, top box, average standard deviation and overall scores.

The results showed slightly lower percentages of straight-lining among both mobile web and SMS respondents compared to traditional online. This is possibly because the questions were presented to mobile respondents one at a time in sequence, rather than in a block (as in the online version). We also examined response variation to assess satisficing in survey responses. In comparing mobile to traditional online survey respondents, we found that both mobile web and SMS respondents used slightly more scale points than traditional online respondents, which yielded higher standard deviations. These two findings indicate that mobile data collection does not increase the likelihood of respondents’ satisficing in their survey answers.
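
The measures named above can be computed from raw ratings roughly as follows. This is a minimal sketch rather than the authors' procedure: the function names, the 1-10 scale and the sample ratings are hypothetical, straight-lining is taken to mean giving the same answer to every rating item, and top box is taken to mean the highest scale point.

```python
from statistics import pstdev

def is_straight_lined(ratings):
    """True when a respondent gave the same answer to every rating item."""
    return len(ratings) > 1 and len(set(ratings)) == 1

def quality_summary(responses, scale_max=10):
    """Summarise one group; `responses` is a list of per-respondent rating lists."""
    n = len(responses)
    all_ratings = [r for resp in responses for r in resp]
    return {
        # share of respondents who never varied their answer
        "straight_lining_rate": sum(is_straight_lined(r) for r in responses) / n,
        # share of all ratings at the top scale point
        "top_box_rate": sum(r == scale_max for r in all_ratings) / len(all_ratings),
        # mean of each respondent's own standard deviation across items
        "avg_std_dev": sum(pstdev(r) for r in responses) / n,
    }

# HYPOTHETICAL ratings on an assumed 1-10 scale, for illustration only
online = [[8, 8, 8, 8], [7, 8, 7, 8], [10, 10, 10, 10]]
mobile = [[6, 9, 7, 10], [8, 8, 8, 8], [5, 7, 9, 6]]
print(quality_summary(online))
print(quality_summary(mobile))
```

A lower straight-lining rate together with a higher average standard deviation is the pattern the studies associate with less satisficing.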

The satisfaction ratings yielded additional interesting findings. In the credit card study, mobile respondents gave lower scores on all rating items compared to traditional online respondents. This cannot be explained by demographics or brand mix. In contrast, results from the handset study showed the opposite effect: mobile respondents’ scores were notably higher than those of traditional online respondents, even after controlling for demographics and the type and manufacturer of the handset. It is possible that this is because respondents enjoyed talking about their handset on their handset.

Overall, the data shows that there is less straight-lining and more variation using mobile devices than for traditional online, although ratings may differ slightly between these methods. Researchers should examine these and other measures to ensure comparability before blending results from different data collection sources. These differences should not, however, discourage researchers from blending the results of mobile data collection with those gathered from traditional online.

4. Survey length matters

Survey length is a key issue for any data collection method. Many of us have created or used data from online surveys with 100 or more items. While such long surveys are burdensome for respondents, it’s still possible to achieve good response rates and retrieve high-quality data from them when they are well designed and appropriate incentives are included. But would any respondent consider completing a 100-item survey by SMS or mobile web?

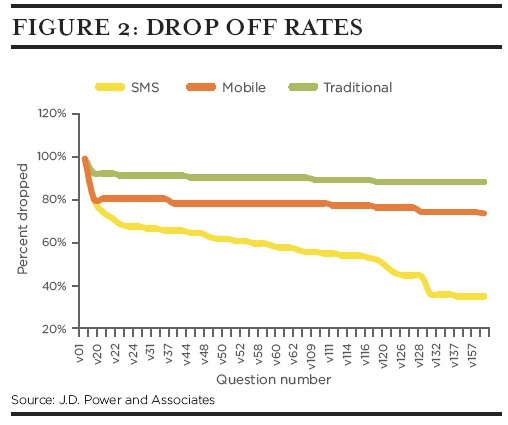

To explore this question, we again collaborated with an international panel provider and invited nearly 4,400 opt-in panelists to complete a survey via SMS or mobile web. We administered a 160-item survey and used “survival analysis” statistical techniques to compare the item drop-out point of the respondents to that of traditional online respondents. The drop-out rate among SMS respondents rose dramatically after 20 items: about 80% of SMS respondents completed 19 or fewer items and 75% completed 20 items. Thereafter, the completion rate fell by 1-2% for each additional question. Drop-out rates were lower for mobile web respondents and in line with those of traditional online respondents. This indicates that once respondents are engaged, they are likely to complete the survey even if it is lengthy.
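
A drop-out curve of the kind described above can be sketched as follows. The article does not specify which survival technique was applied, so this example makes a simplifying assumption: every respondent's stopping point is observed (no censoring), in which case the Kaplan-Meier estimate reduces to the plain share of respondents who reached each item. The function name and the sample data are hypothetical.

```python
from collections import Counter

def survival_curve(items_completed, max_items):
    """Return a list whose entry k-1 is the share of respondents who
    completed at least k items, for k = 1..max_items (no censoring assumed)."""
    n = len(items_completed)
    stopped_after = Counter(items_completed)
    remaining = n
    curve = []
    for k in range(1, max_items + 1):
        # Anyone who stopped after k-1 items never answered item k
        remaining -= stopped_after.get(k - 1, 0)
        curve.append(remaining / n)
    return curve

# HYPOTHETICAL data: number of items each of eight respondents completed
sms_completed = [5, 5, 4, 3, 3, 2, 1, 0]
for item, share in enumerate(survival_curve(sms_completed, max_items=5), start=1):
    print(f"item {item}: {share:.0%} still responding")
```

Plotting one such curve per collection method on the same axes makes it easy to see the item at which each method starts losing respondents.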

5. Location-aware mobile devices are the future

Location-aware (also called location-enabled) mobile devices make it possible to track and even analyse survey responses based on respondents’ exact location when taking the survey.

This will enable researchers to better understand where respondents are most likely to take a survey – at home, at work, or in the gym – and to determine whether responses differ based on the location of the respondent. Researchers should then be able to tailor their surveys based on respondents’ location and data usage habits in the near future.

With new mobile opportunities come new challenges, not least of which are the privacy issues that arise with location-aware devices. Should personally identifiable information be attached to the location information? Can it be stored? Should respondents be given options to preserve a degree of anonymity? How long should the data be kept, who should have access to it and in what ways?

Gina Pingitore is chief research officer and Dan Seldin is director of corporate research at J.D. Power and Associates. Thanks are due to Kevin Frazier and Pete Van Mondfrans of SSI (formerly Opinionology) for their research support.

1 comment

Question: Research using mobile devices is new, different and growing. How does it impact response rates and behaviour?

Yes – mobile respondents are different, but how are they different? How representative are those customers who respond via mobile of your current customer base and how representative are they of your desired future customer base? I have observed that a multi-mode data collection strategy not only allows customers to offer feedback in a way they prefer, but also becomes a way of gaining access to premium customers. When you assess new and multi-mode data collection strategies, it is critical to align the data collection methods with the targeted customer base to develop relevant and unique marketing strategies. As technology enables different and new ways of inviting customer feedback, we need to keep in mind that not all customers and research are created equal. Two professionals whom I respect and value, Christopher Frank and Paul Magnone, expressed in their book, Drinking from the Firehose, “Data is a means to an end. It is the supporting character. Too often it takes the center stage.” I agree. In fact, I suggest this may apply to method too. Method is a means to an end – to provide strategic confidence and an ability to address those questions that are most critical for the future survival and success of your firm.

Ms. Pingitore and Mr. Seldin, thank you for a timely and insightful review of mobile data collection.

Best regards,

Yvette Wikstrom, VP Advocacy Marketing, Market Probe