Mitch Eggers and Jon Puleston

Key learnings about what counts.

The development of online research technology has made international multi-market research one of the biggest growth areas in the industry over the last decade. This growth has produced a demand for more accountability and consistency in the data from international studies.

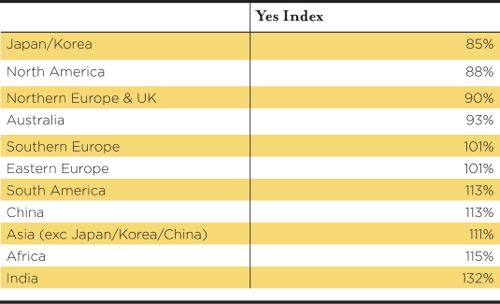

Anyone who has dipped a toe into cross-market research will know that there is a heady mix of variables to contend with when comparing feedback from one country with another. Every country and culture has its own ways of filling in surveys. A person in India, for example, is 90% more likely than someone in Japan to say they like something rather than dislike it.

How panels are recruited and maintained in different countries varies dramatically, and their demographic profiles also differ. There are wide divides in the underlying economics and motivations to do a survey from one country to another, and these can encourage fraudulent behaviour like lying on the screening questions to have a chance to do the survey. A one dollar incentive to complete a survey in China, for example, may have 10 to 20 times the perceived value of that incentive in the USA.

How people comprehend and interpret surveys that have been written in another language by someone from a different culture presents a minefield of issues. In the US, for example, people are very comfortable about saying they ‘love’ a brand, whereas Australians prefer to say a brand is ‘OK.’ And that is even before you factor in translation. The concept of ‘cool’ in American English could easily be literally – and disastrously – translated to ‘cold’ in another language. We often find, for example, that the variance in how a word is interpreted can be larger than the baseline selection rate of that word in a multi-choice word-selection list.

There are also survey-design factors to consider – some countries are much less tolerant of completing long, dull surveys. Survey gamification, an area we specialise in, opens a whole new can of worms. What may motivate someone to answer a question in one country may not tick the boxes of someone in another country.

What is important?

We conducted a major research study to investigate the relative influence of all these factors – cultural, panel and survey-design effects. We aimed to find out what we should really be worrying about when conducting international research and to better understand the relative levels of variance these different factors introduce into the data.

The study was composed of over 20 mini-research experiments conducted across 15 countries with over 11,000 respondents. We tested a variety of different types of question formats and types of panels. We also examined data from over 20 international studies conducted on our panel by various clients to understand the underlying trends in how respondents in different countries answer questions.

Here are some headline learnings from this research.

1. Cross-cultural factors are clearly the most dominant cause of data variance.

The table below compares the propensity to say “yes” to any question in different countries:
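
One simple way to put countries with different baseline "yes" rates on a common footing is to express each item's score relative to that country's own baseline. The sketch below is only an illustration of that idea, not the calibration used in the study: the function names, the country labels and the answers are all made up for the example.

```python
from statistics import mean

def yes_rate(answers):
    """Share of 'yes' answers, where each answer is 1 (yes) or 0 (no)."""
    return mean(answers)

def relative_index(item_answers, baseline_answers):
    """Item yes-rate divided by the country's baseline yes-rate.

    A value above 1 means the item scores above that country's usual
    level of agreement; a value below 1 means it scores below it.
    """
    return yes_rate(item_answers) / yes_rate(baseline_answers)

# Made-up answers for illustration only (not data from the study).
# "baseline" pools answers to many unrelated yes/no questions.
survey = {
    "Country A": {"baseline": [1] * 8 + [0] * 2, "item": [1] * 6 + [0] * 4},
    "Country B": {"baseline": [1] * 4 + [0] * 6, "item": [1] * 4 + [0] * 6},
}

raw = {c: yes_rate(d["item"]) for c, d in survey.items()}
indexed = {c: relative_index(d["item"], d["baseline"]) for c, d in survey.items()}

for country in survey:
    print(f"{country}: raw {raw[country]:.0%}, index {indexed[country]:.2f}")
```

On raw scores Country A looks keener (60% against 40%), but relative to each country's own baseline the picture reverses: Country A is below its norm and Country B is exactly on it. A simple ratio like this is sensitive to how the baseline is chosen.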

Applying score weighting to account for cultural differences can help to smooth out underlying differences, but these have to be carefully calibrated.

2. Beware of untruthfulness

Whatever reason people have for not telling the complete truth in a survey, it is an endemic issue in certain countries and for certain types of questions. We have a simple lie-detection test that can be used within a survey to gauge how truthful respondents are being, from completely fraudulent to low level exaggeration.

From these experiments, we could see that more than 40% of respondents were showing signs of untruthfulness in certain questions in certain countries.

Ownership of an iPad is an example of a vulnerable type of question, with up to 100% over-claiming amongst untruthful respondents. We believe that employing an adequate lie-detection test is a critical component of an international study for any project with an incidence rate falling below 20%.
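
To make the over-claiming arithmetic concrete, here is a minimal sketch. It assumes a common trap-item approach, in which a fictitious product is hidden in the ownership list and anyone who claims to own it is flagged. That is an assumption for illustration, not a description of our own lie-detection test, and the product name, function names and respondent data are all hypothetical.

```python
TRAP_ITEM = "Zentab X"  # hypothetical, non-existent product used as a trap

def claim_rate(respondents, item):
    """Share of respondents who say they own `item`."""
    return sum(item in r["claims"] for r in respondents) / len(respondents)

def over_claiming(respondents, item, trap_item=TRAP_ITEM):
    """Relative excess of claims among flagged respondents.

    Respondents who claim the trap item are flagged as untruthful.
    Returns 1.0 when flagged respondents claim `item` at twice the
    rate of the others, i.e. 100% over-claiming.
    """
    flagged = [r for r in respondents if trap_item in r["claims"]]
    trusted = [r for r in respondents if trap_item not in r["claims"]]
    if not flagged or not trusted:
        raise ValueError("need both flagged and unflagged respondents")
    trusted_rate = claim_rate(trusted, item)
    if trusted_rate == 0:
        raise ValueError("no unflagged respondent claims the item")
    return claim_rate(flagged, item) / trusted_rate - 1

# Made-up respondents for illustration only.
respondents = (
    [{"claims": {"iPad"}}] * 2                # unflagged owners
    + [{"claims": set()}] * 6                 # unflagged non-owners
    + [{"claims": {"iPad", TRAP_ITEM}}] * 2   # flagged, also claim an iPad
    + [{"claims": {TRAP_ITEM}}] * 2           # flagged, no iPad claim
)

excess = over_claiming(respondents, "iPad")
unflagged_only = [r for r in respondents if TRAP_ITEM not in r["claims"]]
print(f"over-claiming among flagged respondents: {excess:.0%}")
print(f"incidence, all respondents: {claim_rate(respondents, 'iPad'):.0%}")
print(f"incidence, unflagged only: {claim_rate(unflagged_only, 'iPad'):.0%}")
```

In this toy sample the flagged group claims ownership at twice the rate of everyone else, which lifts the measured incidence from 25% to about 33%. The lower the true incidence, the larger the share of claims that can come from such respondents.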

This does, however, have ramifications on panel sourcing costs.

3. Speeding is a real hidden problem

Speeding is often brushed under the carpet or dealt with inadequately by screening out people who speed through the whole survey. The problem is that nearly everyone speeds at some stage whilst completing a survey (in our experiments it was measured to be around 85% of respondents).

Respondents pick and choose when to speed, and it gets worse as they become more bored. At the end of the survey, we found it to be the single largest source of data variance of all the factors we measured.
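
The difference between screening for whole-survey speeders and looking at speeding question by question can be sketched as follows. This is an illustration under stated assumptions rather than the measure used in our experiments: the rule (an answer given in less than half the median time for that question counts as sped), the function names and the timings are all assumptions made up for the example.

```python
from statistics import median

SPEED_FRACTION = 0.5  # assumed cut-off: faster than half the question median

def speeding_flags(timings, fraction=SPEED_FRACTION):
    """Flag each answer given faster than `fraction` of the question median.

    `timings` maps a respondent id to a list of seconds per question;
    every respondent is assumed to have answered the same questions.
    """
    n_questions = len(next(iter(timings.values())))
    medians = [
        median(times[q] for times in timings.values())
        for q in range(n_questions)
    ]
    return {
        rid: [times[q] < fraction * medians[q] for q in range(n_questions)]
        for rid, times in timings.items()
    }

def share(flags, rule):
    """Share of respondents whose per-question flags satisfy `rule`."""
    return sum(rule(f) for f in flags.values()) / len(flags)

# Made-up timings in seconds for four respondents and three questions.
timings = {
    "r1": [10, 12, 11],
    "r2": [9, 3, 10],
    "r3": [11, 12, 3],
    "r4": [2, 2, 2],
}

flags = speeding_flags(timings)
sped_at_least_once = share(flags, any)
sped_throughout = share(flags, all)
print(f"sped on at least one question: {sped_at_least_once:.0%}")
print(f"sped through every question: {sped_throughout:.0%}")
```

Here a whole-survey screen would catch only one respondent in four, while three in four sped on at least one question. A median-based cut-off is itself pulled down when many respondents speed on the same question, so a fixed benchmark time per question may be a safer reference.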

4. Good question design is the main weapon for tackling speeding effects

In every country and with nearly every question tested, adopting a more creative and engaging approach to the design of questions proved to reduce speeding effects and the data variance caused by it.

Imagery proved to be far more consistently interpreted than words across different countries. Survey gamification also seemed to work as a powerful motivator everywhere. The evidence shows that more engaging question techniques deliver more consistent data across markets.

There were exceptions. The choice of imagery could, on occasion, dictate responses, and certain creative techniques like card sorting and sliders deliver measurably different responses compared to traditional questions. The wording of questions could sometimes steer answers off course.

5. Panel variance is on the same scale as demographic variance.

Switching panels can cause the same level of data variance you might expect from, say, switching from an all-female to an all-male panel. It is very important to calibrate for panel-variance effects. Mixed panels provided more consistent cross-market data than single-source panels.

So what is the main thing you should be worrying about?

This advice may not be of much help, but – everything.

Ensuring a balanced demographic panel, screening for liars in low-incidence studies, weighting for cultural differences, ensuring in the design of your survey that you don’t encourage speeding, and making sure surveys are well translated are all equally important considerations.

Mitch Eggers is Chief Scientist and Jon Puleston is Vice President, innovation, at GMI

Check out ESOMAR’s new 28 Questions for Buyers of Online Samples. These questions aim to raise awareness of the key issues in helping researchers consider whether an approach is fit for their purpose.

1 comment

Thanks Jon and Mitch for raising this topic, which is so often overlooked and has such a huge impact on cross-country study results and comparability.

If anyone is interested in the topic, I strongly recommend you download Jon and Mitch’s paper from the ESOMAR Congress, ‘Dimensions of Online Survey Data Quality’. Really good and thorough.