James Rohde

As the strategic needs of your brand expand, one of the first areas to start feeling a bit small is the scope of your brand research. When your brand research is no longer influencing your brand strategy or reflecting the brand’s evolution, it is time for a more in-depth brand tracking tool.

The essence of this tool is rooted in connecting multiple layers of brand information at the respondent level:

1. Consumer Reported Positioning (What do consumers think of you?)

2. Consumer Engagement (How do consumers interact with you?)

3. Consumer Purchasing Data (How, and to what extent, are consumers using you?)

Ultimately, we are going to connect your brand experiences to brand perceptions, and then to sales data. This helps us answer:

1. How much is a point of satisfaction worth?

2. Where else are your best customers shopping?

3. How much do they like your competitors? Why?

4. Does your brand need to build different levels of trust to convert within your different product categories?

5. Where in the engagement process do consumers start to commit to your brand with their purchases?

For now, we will assume that you have or can gain access to the transaction data.

Clearly, areas one and two are not conceptually new, but for them to work well together they need to be laid out very purposefully, so that the result works well with the transaction data that will be layered on at the end.

Consumer Reported Positioning

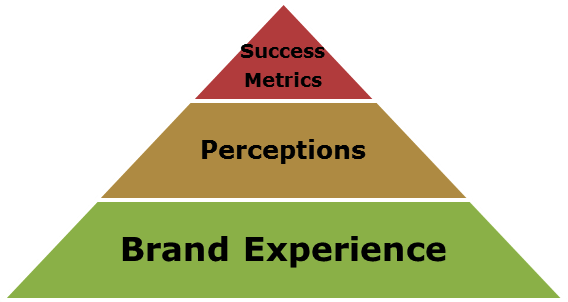

The consumer reported positioning is made up of many of the same metrics found in most brand satisfaction studies. However, to keep the questions effective, we have to be more efficient and restrained in the design phase. Each question included should fit into one, and only one, of three buckets:

A. Brand Experiences

B. Brand Perceptions

C. Success Metrics

Brand Experiences

Brand Experiences define what the respondent did or did not do while interacting with you or one of your competitors. These questions help determine how prevalent a certain activity is when using your brand. They also work well with skip logic, so you can determine whether the respondent is eligible for different questions within your survey.

For example, did they try asking an associate for help while shopping? Did they check competitor prices while shopping in your store?
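To make the skip-logic point concrete, here is a minimal Python sketch of how experience answers could gate follow-up questions. The question IDs and the `FOLLOW_UPS` mapping are hypothetical; a real survey platform would express the same rules in its own logic builder.

```python
from typing import Dict, List

# Hypothetical mapping: follow-up question ID -> experience it depends on.
# Experience answers are stored as True (did it) or False (did not).
FOLLOW_UPS: Dict[str, str] = {
    "describe_associate_help": "asked_associate_for_help",
    "which_competitor_prices": "checked_competitor_prices",
}


def eligible_questions(experiences: Dict[str, bool]) -> List[str]:
    """Return the follow-up questions this respondent should be shown."""
    return [
        question
        for question, required in FOLLOW_UPS.items()
        # An unanswered experience is treated as "did not", so the follow-up is skipped.
        if experiences.get(required, False)
    ]


respondent = {"asked_associate_for_help": True, "checked_competitor_prices": False}
print(eligible_questions(respondent))  # ['describe_associate_help']
```

The same True/False experience flags are later reused in the analysis, which is one reason to store them at the respondent level rather than only as routing rules.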

These questions can balloon quickly, so it’s critical that each experience question has a purpose. Why will that particular experience impact the brand or, if not the brand directly, the analysis?

An experience question usually impacts the brand directly only when we need to understand how often a certain activity is taking place. One example we all hear a lot about is checking prices on Amazon before completing an in-store purchase.

Brand Perceptions

Brand perceptions are the questions that determine how and what consumers think about you vs. your competition. This is where we’ll find the ‘Brand is right for me’, ‘Knowledgeable staff’, and ‘Thought leader in the industry’ attributes.

The trick here is to make sure that the perceptions you choose to include are supported by the experiences you are asking about. Take ‘Has a knowledgeable staff’ as an example. There is an argument to be made about whether respondents who have not interacted with a staff member should be asked the question at all, but regardless, for a clear analysis we need to know how many, and which, respondents actually asked your staff a question.

If the perception that your staff is knowledgeable is lower for you than for the competition, you will need to determine whether this stems directly from interactions with the staff or is a halo effect from something else.
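One simple way to start separating a direct staff effect from a halo effect is to split the perception rating by the related experience. The sketch below assumes hypothetical respondent fields `asked_staff` (True/False) and `staff_rating` (a 1 to 5 agreement score for ‘Has a knowledgeable staff’); it is a descriptive cut, not a full diagnostic.

```python
from statistics import mean
from typing import Dict, List


def rating_by_interaction(rows: List[dict]) -> Dict[str, float]:
    """Mean 'knowledgeable staff' rating, split by whether the respondent asked staff a question."""
    asked = [r["staff_rating"] for r in rows if r["asked_staff"]]
    did_not_ask = [r["staff_rating"] for r in rows if not r["asked_staff"]]
    # NaN marks an empty group instead of raising an error.
    return {
        "asked_staff": mean(asked) if asked else float("nan"),
        "did_not_ask": mean(did_not_ask) if did_not_ask else float("nan"),
    }


# Illustrative toy data only.
rows = [
    {"asked_staff": True, "staff_rating": 4},
    {"asked_staff": True, "staff_rating": 5},
    {"asked_staff": False, "staff_rating": 2},
    {"asked_staff": False, "staff_rating": 3},
]
print(rating_by_interaction(rows))  # {'asked_staff': 4.5, 'did_not_ask': 2.5}
```

If the low scores are concentrated among respondents who never asked a question, the weak perception is more likely a halo from something else; if they come from those who did ask, the interactions themselves deserve a closer look. Running the same split for each competitor puts the gap in context.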

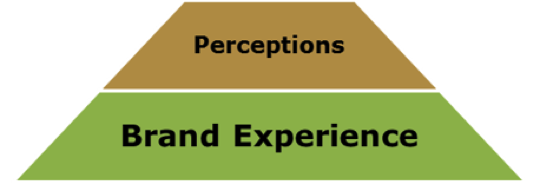

The brand experiences and brand perceptions are meant to complement each other so that every experience is impacting an intended perception and each perception is supported by at least one experience. There are usually more experience questions than perceptions and it often makes sense for one perception to be supported by multiple brand experiences.
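Because every experience should feed an intended perception and every perception should rest on at least one experience, the design can be checked mechanically before fielding. This is a minimal sketch with a hypothetical design map; the question and perception names are illustrative only.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical design map: experience question -> perceptions it is meant to support.
DESIGN: Dict[str, Set[str]] = {
    "asked_associate_for_help": {"knowledgeable_staff"},
    "found_item_easily": {"knowledgeable_staff", "easy_to_shop"},
    "used_mobile_app": set(),  # not yet tied to any perception
}
PERCEPTIONS: Set[str] = {"knowledgeable_staff", "easy_to_shop", "right_for_me"}


def audit_design(design: Dict[str, Set[str]], perceptions: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (experiences that support no perception, perceptions with no supporting experience)."""
    orphan_experiences = sorted(exp for exp, supported in design.items() if not supported)
    covered: Set[str] = set().union(*design.values())
    unsupported_perceptions = sorted(perceptions - covered)
    return orphan_experiences, unsupported_perceptions


print(audit_design(DESIGN, PERCEPTIONS))  # (['used_mobile_app'], ['right_for_me'])
```

Here `knowledgeable_staff` is supported by two experiences, which is the normal case: one perception often rests on several experiences.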

Success Metrics

The success metrics are fairly straightforward. This is where we add overall satisfaction, likelihood to recommend, and so on. However, one metric that is all too often missing is the brand mission statement. Satisfaction, recommendation, and likelihood to use again are obviously important, but this is the place where you can see how well you are delivering on whatever mission your brand has tasked itself to accomplish.

When this part of the design is complete, you should have success metrics that articulate how often you are winning the hearts and/or minds of your customers, supported by what they think of you (brand perceptions), and further supported by why they think of you that way (brand experiences). For the sake of explanation these question categories are grouped together, but this is not meant to imply that the questions should be asked consecutively in your survey. Best practices for survey flow should still be applied.

This one portion of the tool can take quite a bit of discussion, but it is just one piece of the puzzle.

Consumer Engagement

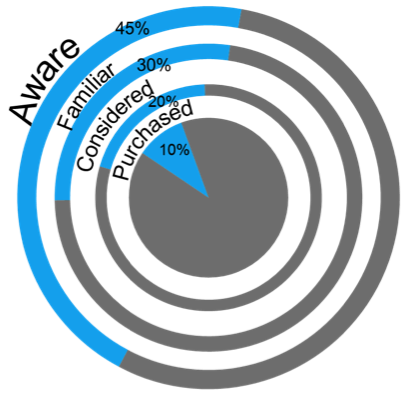

Consumer engagement can be measured in all sorts of ways, each with strengths in some areas and weaknesses in others. At this point, we believe the best way to understand if and how consumers feel connected to a brand is through the brand funnel. The point of the funnel in this instance is not to map a path to purchase but to measure advantage or disadvantage in the marketplace.

Different industries can influence the ideal set-up but for the sake of illustration, we’ll keep the funnel relatively basic.

A. Awareness

B. Familiarity

C. Consideration

D. Purchase

Awareness

It is nearly always appropriate to include both unaided and aided awareness in the survey. These responses should not just be tallied but coded, so that they can be reincorporated into the data set at the respondent level. Unaided awareness is a far more valuable equity than aided awareness; knowing how these people ended up with your brand top-of-mind is going to impact strategy.
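Coding unaided responses can start with matching each verbatim against a codebook of brand names and common misspellings, then storing the resulting flags per respondent. The sketch below assumes a hypothetical `CODEBOOK` and treats the first brand mentioned as top-of-mind; real verbatims usually still need a manual review pass on top of any automatic matching.

```python
import re
from typing import Dict, List, Optional, Union

# Hypothetical codebook: brand code -> lowercase spellings seen in verbatims.
CODEBOOK: Dict[str, List[str]] = {
    "our_brand": ["our brand", "ourbrand"],
    "competitor_a": ["competitor a", "comp a"],
}


def code_unaided(verbatim: str) -> Dict[str, Union[int, Optional[str]]]:
    """Turn one open-ended unaided-awareness answer into respondent-level fields."""
    text = verbatim.lower()
    fields: Dict[str, Union[int, Optional[str]]] = {}
    first_mention: Dict[str, int] = {}
    for brand, aliases in CODEBOOK.items():
        # Whole-word matches only, so "comp a" does not match inside "comp all".
        positions = [
            m.start()
            for alias in aliases
            for m in re.finditer(rf"\b{re.escape(alias)}\b", text)
        ]
        fields[f"unaided_{brand}"] = int(bool(positions))
        if positions:
            first_mention[brand] = min(positions)
    # The brand mentioned earliest in the answer is treated as top-of-mind.
    fields["top_of_mind"] = min(first_mention, key=first_mention.get) if first_mention else None
    return fields


print(code_unaided("Comp A, then OurBrand"))
# {'unaided_our_brand': 1, 'unaided_competitor_a': 1, 'top_of_mind': 'competitor_a'}
```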

Familiarity / Consideration

Either of these funnel metrics, but ideally both, can have levels to indicate low vs. high familiarity or consideration. These levels represent the devilish details that can really shed light on your findings.

That said, this level of the funnel can be harder to deal with than most people give it credit for:

1. Are people familiar with the brand because they considered it?

2. Did people consider the brand because they were familiar?

This is where we invoke the disclaimer from the start of this section: the funnel is not meant to be an individual’s path to purchase. Besides, the critical interaction has very little to do with which came first; all that matters is how many people who are familiar with the brand decide NOT to consider it. Understanding that ratio for your brand vs. the competition is a great foundation for understanding the broader marketplace.

Understanding why they did not consider the brand matters too, but that is covered when you bring the first section, Consumer Reported Positioning, into your analysis.
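The familiar-but-did-not-consider ratio is straightforward to compute once familiarity and consideration are stored per respondent. This sketch assumes each respondent record holds hypothetical `familiar` and `considered` sets of brand codes, and it treats any level of familiarity as familiar.

```python
from typing import Dict, List


def familiar_not_considered(rows: List[dict], brands: List[str]) -> Dict[str, float]:
    """Share of respondents familiar with each brand who did NOT consider it."""
    rates: Dict[str, float] = {}
    for brand in brands:
        familiar = [r for r in rows if brand in r["familiar"]]
        rejected = [r for r in familiar if brand not in r["considered"]]
        # NaN when nobody in the sample is familiar with the brand.
        rates[brand] = len(rejected) / len(familiar) if familiar else float("nan")
    return rates


# Illustrative toy data only.
rows = [
    {"familiar": {"ours", "comp_a"}, "considered": {"ours"}},
    {"familiar": {"ours", "comp_a"}, "considered": {"comp_a"}},
    {"familiar": {"ours"}, "considered": {"ours"}},
    {"familiar": {"comp_a"}, "considered": set()},
]
print(familiar_not_considered(rows, ["ours", "comp_a"]))
```

In this toy data, one in three respondents familiar with `ours` did not consider it, versus two in three for `comp_a`, which would point to an advantage for `ours` at this stage of the funnel.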

Purchase

Note that in the illustration above, consideration and purchase are labeled in the past tense. This is intentional, since we like to separate our purchase funnel from our loyalty funnel (a different topic). For our immediate purpose, we want to keep the funnel focused on past behavior rather than intended future actions.

That is to say, we know and appreciate a brand funnel that ends with loyalty. We suggest including it in this tool and in the analysis, but for this exercise we are focusing only on the areas of the funnel that reflect past behavior.

Intermission

If you do not have access to the transactional data in any way, simply covering these first two sections in a single study will still produce a solid brand study that connects perceptions with engagement. More importantly, you will see some cause and effect as you measure the impact of your evolving marketing efforts. Understanding how and when you are impacting reach, perception, and engagement is very powerful, especially when you can see how your competition is affected.

Consumer Purchasing Data

Tying your respondents to your transactional data adds substantial power to what we have already laid out. Specifically, this is where we connect brand perceptions and engagement with dollars and action. Remember the brand experience question about interacting with the staff? This is where we see how much more a positive staff interaction is worth.

The sampling methodology is the critical first step to making this work. If you simply field your research among your in-house panel, or send out a link to your loyalty members, you will skew the information. For one, brand research should be blind: respondents should not know who is asking them to take the survey before they rate the brands. Second, and perhaps less obvious, the people on your in-house list are going to be distributed demographically in a way that differs from the census-based distribution provided by your panel company.

This means you have to make the effort to match your outside respondents with their transaction information. A loyalty program number is a really nice way to do that, since it avoids the issues that come with collecting contact information from panel company respondents.
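A loyalty-number match can be sketched as a join between survey respondents and summed transactions. The field names (`loyalty_id`, `amount`, `resp_id`) are hypothetical, and a production match would also need to handle mistyped or partial numbers; this version only trims whitespace before matching.

```python
from typing import Dict, List, Tuple


def match_transactions(survey: List[dict], transactions: List[dict]) -> Tuple[List[dict], float]:
    """Attach total spend to respondents via loyalty number; return matched rows and match rate."""
    # Sum transaction amounts per loyalty number.
    spend_by_id: Dict[str, float] = {}
    for t in transactions:
        spend_by_id[t["loyalty_id"]] = spend_by_id.get(t["loyalty_id"], 0.0) + t["amount"]

    matched = []
    for r in survey:
        # Self-reported numbers may be blank or padded with spaces.
        lid = (r.get("loyalty_id") or "").strip()
        if lid and lid in spend_by_id:
            matched.append({**r, "loyalty_id": lid, "spend": spend_by_id[lid]})

    match_rate = len(matched) / len(survey) if survey else 0.0
    return matched, match_rate


# Illustrative toy data only.
survey = [
    {"resp_id": 1, "loyalty_id": "A1"},
    {"resp_id": 2, "loyalty_id": " B2 "},
    {"resp_id": 3, "loyalty_id": None},
    {"resp_id": 4, "loyalty_id": "Z9"},
]
transactions = [
    {"loyalty_id": "A1", "amount": 20.0},
    {"loyalty_id": "A1", "amount": 5.0},
    {"loyalty_id": "B2", "amount": 40.0},
]
matched, rate = match_transactions(survey, transactions)
print(rate)  # 0.5
```

Unmatched respondents are dropped from the merged set here (an inner join), so the match rate directly sets how much of the sample the purchase analysis can use.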

Once the matching is complete and transactions have been merged with your survey data, the analysis is limited only by your sample. In our experience, match rates can vary between 40% and 70%.
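With matched data in hand, questions such as what a positive staff interaction is worth, or what a point of satisfaction is worth, can be approached with simple comparisons. This is a minimal sketch on hypothetical fields (`positive_staff`, `satisfaction`, `spend`); both numbers are descriptive associations, and a fuller analysis would control for other differences between customers.

```python
from statistics import mean
from typing import List


def spend_gap(rows: List[dict], flag: str) -> float:
    """Mean spend of respondents with the experience minus mean spend of those without it."""
    with_exp = [r["spend"] for r in rows if r[flag]]
    without = [r["spend"] for r in rows if not r[flag]]
    return mean(with_exp) - mean(without)


def spend_per_satisfaction_point(rows: List[dict]) -> float:
    """Ordinary least-squares slope of spend on the satisfaction score."""
    x = [r["satisfaction"] for r in rows]
    y = [r["spend"] for r in rows]
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return sxy / sxx


# Illustrative toy data only.
rows = [
    {"satisfaction": 6, "positive_staff": False, "spend": 200.0},
    {"satisfaction": 7, "positive_staff": False, "spend": 250.0},
    {"satisfaction": 8, "positive_staff": True, "spend": 300.0},
    {"satisfaction": 9, "positive_staff": True, "spend": 350.0},
]
print(spend_gap(rows, "positive_staff"))   # 100.0
print(spend_per_satisfaction_point(rows))  # 50.0
```

In this toy data, each additional satisfaction point is associated with 50 more in spend, and respondents reporting a positive staff interaction spent 100 more on average.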

Taking It One Step Further

Continuous fielding is not required, but it is strongly preferred. The ability to capture unduplicated respondents throughout the year ensures that your measurements reflect the brand and account for seasonal skews or short-term campaigns. This is especially true for competitors, since you may not have real-time visibility into the campaigns they are running.

Internally, consider dashboards for your Key Performance Indicators. All researchers cringe a bit when data goes out without full analysis, but for your funnel metrics, satisfaction scores, and other core measures, keeping these numbers up to date extends the useful life of your results beyond just one or two readouts a year. Because of sample size, we like to keep these to monthly or quarterly updates.
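For the dashboard, continuously fielded responses can be rolled up by quarter, with cells that are too small left blank. This sketch assumes hypothetical fields `completed` (a `datetime.date`), `satisfaction`, and `aware` (True/False); the default `min_n=30` is an arbitrary placeholder threshold.

```python
from collections import defaultdict
from datetime import date
from statistics import mean
from typing import Dict, List, Optional, Tuple


def quarterly_kpis(rows: List[dict], min_n: int = 30) -> Dict[Tuple[int, int], Dict[str, Optional[float]]]:
    """Roll respondent-level results into (year, quarter) buckets for a KPI dashboard."""
    buckets: Dict[Tuple[int, int], List[dict]] = defaultdict(list)
    for r in rows:
        d: date = r["completed"]
        buckets[(d.year, (d.month - 1) // 3 + 1)].append(r)

    out: Dict[Tuple[int, int], Dict[str, Optional[float]]] = {}
    for key in sorted(buckets):
        group = buckets[key]
        n = len(group)
        too_small = n < min_n  # suppress unstable estimates from thin samples
        out[key] = {
            "n": n,
            "mean_satisfaction": None if too_small else mean(r["satisfaction"] for r in group),
            "aware_pct": None if too_small else 100 * sum(r["aware"] for r in group) / n,
        }
    return out


# Illustrative toy data only; min_n=1 so every cell is reported.
rows = [
    {"completed": date(2024, 1, 15), "satisfaction": 8, "aware": True},
    {"completed": date(2024, 2, 20), "satisfaction": 6, "aware": False},
    {"completed": date(2024, 4, 3), "satisfaction": 9, "aware": True},
]
print(quarterly_kpis(rows, min_n=1))
```

Swapping the quarter key for `(d.year, d.month)` gives monthly buckets instead.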

That said, very often a basic study does the job very well and is more often under-analysed than under-performing.

James Rohde is Research Supervisor at MARC USA