Gina Pingitore

Let’s face it: the use of social media is more popular than ever before. In fact, recent estimates indicate that on any given day there are nearly 100 million users on Twitter alone posting messages that range from the inane to the insightful.

For market researchers, this vast amount of conversation offers a treasure trove of data to mine and insights to gather regarding the real-time needs of consumers.

Although social media (SM) data offers new opportunities, its users know that it is noisier and less precise than data generated by traditional market research methods. Despite improvements in text analytic tools, it is not always easy to codify what a word means or to know its true reference. For example, “sick” in some circles is a good thing, and when someone is commenting about “Hilton,” it can be difficult to know if the reference is to the brand or to a person. Company-sponsored social media marketing and “Twitter bombs” add a further concern about the quality of SM data: the need to distinguish real consumer dialogue from organized communications.

But do these issues actually impact the quality of SM data? More importantly, if they do, what can be done to improve the quality and resulting interpretation of such data? To answer these questions, we compared the results of six analysts who were tasked with writing queries and extracting data for specific topics using NetBase’s Theme Manager tool.

Data quality fundamentals

Whether researchers measure the distance between two stars, an athlete’s aerobic capacity, a company’s level of customer satisfaction or a brand’s consumer-generated sentiment, the results should meet two fundamental measurement criteria: reliability and validity. In fact, it cannot be overemphasised how important it is to achieve a reasonable level of both. A measure that lacks reliability and validity produces results that are, at best, distorted and, at worst, of no value.

Reliability broadly refers to the consistency (or reproducibility) of the results. While there are many different types of reliability, inter-analyst agreement is arguably the most critical to SM data. In practical terms, inter-analyst agreement is the degree to which the findings (volume of sound bites, sentiment, etc.) are consistent across different analysts who conduct queries on the same topic, using the same tools, and across the same time period and sites/sources. This is an important consideration and raises a question: if different analysts get different results, how can SM users have confidence that the results are real and not a function of the analyst who generated the data?

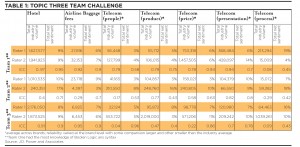

To evaluate inter-analyst reliability, we assembled three different teams, each including two analysts. Teams varied in the degree of knowledge of the NetBase Theme Manager tool as well as in their expertise in developing Boolean logic and syntax. Theme Manager was chosen because it allows analysts to develop complex Boolean logic searches to extract relevant verbatims from its social media database. Each team was then given three different topics that varied in degree of complexity. Topic one was the simplest: assessing what hotel guests say about nine different upscale hotels. Topic two was slightly more complex: assessing what travelers say about baggage fees across 12 airlines. Topic three was the most complex: assessing what cell phone users say about the products, people, processes, presentation and prices of the top six telecom providers located in the United States and Canada.

Teams were instructed to extract total volumes and sentiment (numbers of positive, negative, and unknown sound bites) across a predefined time period (July 2010 to August 2011). To further reduce extraneous variation, analysts were also instructed to spend no more than 4 hours per topic. Inter-analyst reliability was then assessed by comparing intra-class correlation coefficients (ICCs) both within and between teams. An ICC is commonly used to assess the consistency or agreement between two or more individuals on the outcomes of interest, in this case volume and sentiment. ICC values are read like other correlation coefficients, ranging from 0.0 (no agreement) to 1.0 (complete agreement).
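To make the ICC concrete, here is a minimal Python sketch of one common variant, the one-way random-effects ICC for single ratings. The article does not say which ICC form was used, so the choice of variant is an assumption, and the function name `icc_oneway` and the volume figures are purely illustrative, not study data.

```python
from statistics import mean

def icc_oneway(ratings):
    """One-way random-effects ICC for single ratings.

    ratings: one row per target (e.g. a hotel brand),
             one column per analyst (e.g. total volume each analyst extracted).
    """
    n = len(ratings)        # number of targets
    k = len(ratings[0])     # number of analysts per target
    grand = mean(v for row in ratings for v in row)
    row_means = [mean(row) for row in ratings]

    # Mean square between targets: how much the brands differ from each other.
    msb = k * sum((m - grand) ** 2 for m in row_means) / (n - 1)
    # Mean square within targets: how much analysts differ on the same brand.
    msw = sum(
        (v - m) ** 2 for row, m in zip(ratings, row_means) for v in row
    ) / (n * (k - 1))

    return (msb - msw) / (msb + (k - 1) * msw)

# Hypothetical volumes for four brands from two analysts (made-up numbers).
volumes = [
    [1200, 1150],
    [800, 950],
    [300, 310],
    [2100, 1700],
]
print(round(icc_oneway(volumes), 3))
```

When two analysts return identical volumes for every brand, the within-target term is zero and the function returns 1.0. With small samples this estimator can also return a value below zero, which is usually read as no agreement.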

Examination of the agreement levels for both total volume and net sentiment shows wide variation in the results obtained among analysts. Analysts in Team One produced the most consistent results, particularly for total volume, with ICC values ranging in the high 0.9s for most topics. In contrast, analysts in the other two teams were less consistent in the results they obtained for both volume and sentiment.

The implication of these findings is that results from SM analyses can depend on the analyst conducting the assessment. Limited reliability means that there is a real potential that the findings would be very different if the analysis were conducted by another researcher. Therefore, users of social media data must have in place solid processes and procedures to maximise consistency in the results.

They might agree, but are they right?

Validity refers to the accuracy of an assessment — whether or not it measures what it’s supposed to measure. Even if a test is reliable, it may not provide a valid measure. Let’s imagine that a bathroom scale consistently tells you that you weigh 110 pounds. The scale has good reliability, but it might be inaccurate (low validity) if you really weigh 150!

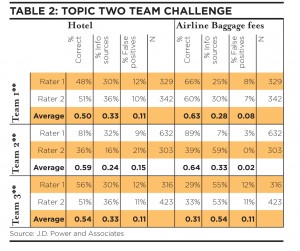

To evaluate the validity of social media findings, a random sample of sound bites was extracted from each analyst’s results from the hotel and airline baggage fee topics described above. These sound bites were then evaluated by a separate team, which assessed each post on two criteria: the percent of false positives (eg, including sound bites referencing a person named Hilton rather than the brand) and the percent of information sources (the inclusion of news reports or company-sponsored marketing). An estimate of the overall percent correct was then calculated by taking into account the criteria reviewed.
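The tally behind such a review can be sketched in a few lines of Python. The article does not give the exact formula for the overall percent correct, so this sketch assumes each sampled sound bite receives exactly one label and that "percent correct" is simply the share labelled valid. The label names and the sample counts are hypothetical.

```python
from collections import Counter

# Hypothetical labels a QA reviewer assigns to each sampled sound bite.
VALID = "valid"
FALSE_POSITIVE = "false_positive"    # e.g. a person named Hilton, not the brand
INFO_SOURCE = "information_source"   # e.g. a news report or company marketing

def validity_summary(labels):
    """Return the percentage of each label in a QA-reviewed sample."""
    total = len(labels)
    if total == 0:
        raise ValueError("sample is empty")
    counts = Counter(labels)

    def pct(label):
        return 100.0 * counts[label] / total

    return {
        "pct_false_positive": pct(FALSE_POSITIVE),
        "pct_information_source": pct(INFO_SOURCE),
        # Assumption: a sound bite is "correct" only if it is neither a
        # false positive nor an information source.
        "pct_correct": pct(VALID),
    }

# Made-up sample of 100 reviewed sound bites for one analyst.
sample = [VALID] * 80 + [FALSE_POSITIVE] * 8 + [INFO_SOURCE] * 12
print(validity_summary(sample))
```

If a topic is defined to include news and informational posts, the information-source label would count as valid instead, which is why the topic definition has to be fixed before the review begins.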

As can be seen in Table 2, the validity of results also varied across teams and analysts, with some analysts obtaining more accurate results than others. As with the findings for reliability, these results should cause users of SM data to ask whether their data is reliable and valid.

We have identified six best practices to help ensure that findings from social media are both reliable and valid.

1. Be specific in defining your topic. Clearly defining the focus of your topic and determining whether it is restricted to personal narratives or also includes informational and news posts is a critical first step in obtaining reliable and valid SM data. As can be seen in Table 2, the percentage of sound bites that came from information sources differed notably among analysts. This difference accounts for much more of the variation in total volume of sound bites across analysts than does the difference in the percentage of false positives.

2. Establish the right balance between precision and coverage. As a general rule, the more exclusions there are in a query, the lower the rate of false positives. However, adding exclusions also results in the loss of valid sound bites. Therefore, it is important to set a target precision rate before query development begins. As a guide, precision levels around 80% are acceptable when the purpose is directional in nature, but higher levels (90%) should be used when the purpose is to benchmark or compare different brands.

3. Avoid sentiment expressions in queries. In an attempt to get consumer opinions, some analysts developed lengthy queries that included specific sentiment expressions (eg, “I love,” “I hate”). The inclusion of these expressions, however, dramatically impacted both the total volume of sound bites and the percent net sentiment (% positive minus % negative). Therefore, sentiment expressions should be avoided, particularly when the purpose of the study is to objectively compare the volume of consumer sentiment.

4. Employ well-trained analysts. Although most social media tools are user-friendly, expertise and experience in Boolean logic and query development are essential in obtaining quality SM data. Our findings show that the team with the highest level of agreement had more technical experience in developing and executing Boolean logic and syntax.

5. Utilise separate QA teams. An experienced QA team is invaluable in obtaining reliable and valid results. Our best practice is to employ a dedicated QA team that randomly extracts a proportionate number of verbatims from all queries, classifies each as accurate (eg, “I enjoyed my stay at the Hilton”) or a false positive (eg, “Paris Hilton to face drug charges”), and evaluates coverage.

6. Ensure proper feedback. It is essential that analysts receive detailed feedback from the QA team and revise their queries based on the errors found. This process must be repeated until the predefined precision level is achieved (in our case, 90%). As a guide, our best practice is to conduct at least two rounds of QA for simple topics and additional rounds for those that are more complex. Additionally, rather than attempting to catch all errors by sampling a large number of verbatims in the first wave, start by running QA on smaller samples (50-100 verbatims) to find the initial critical errors.
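The trade-off in practice 2 and the QA check in practices 5 and 6 can be illustrated with a small Python sketch. It is not NetBase query syntax: the simple substring matcher, the function names, and all but the two example posts quoted in practice 5 are assumptions made for illustration. The relevance flags stand in for the judgments a QA reviewer would make.

```python
TARGET_PRECISION = 0.90  # benchmark-level target cited in the practices above

def matches(post, include, exclude):
    """True if the post contains any include term and no exclude term."""
    text = post.lower()
    return any(t in text for t in include) and not any(t in text for t in exclude)

def precision_and_coverage(posts, include, exclude):
    """posts: list of (text, is_relevant) pairs labelled by a QA reviewer."""
    retrieved = [rel for text, rel in posts if matches(text, include, exclude)]
    relevant_total = sum(rel for _, rel in posts)
    hits = sum(retrieved)
    precision = hits / len(retrieved) if retrieved else 0.0
    coverage = hits / relevant_total if relevant_total else 0.0
    return precision, coverage

# Tiny hand-labelled sample; True means the post is about the hotel brand.
posts = [
    ("I enjoyed my stay at the Hilton", True),
    ("Paris Hilton to face drug charges", False),
    ("Flew to Paris, the Hilton near the airport was great", True),
    ("Hilton front desk lost my reservation", True),
    ("Paris Hilton launches new perfume", False),
]

broad = precision_and_coverage(posts, ["hilton"], [])
blunt = precision_and_coverage(posts, ["hilton"], ["paris"])
tuned = precision_and_coverage(posts, ["hilton"], ["paris hilton"])

for name, (p, c) in [("broad", broad), ("blunt", blunt), ("tuned", tuned)]:
    status = "meets target" if p >= TARGET_PRECISION else "revise query"
    print(f"{name}: precision={p:.2f} coverage={c:.2f} -> {status}")
```

In this toy sample the query with no exclusions falls short of the precision target, the blunt exclusion of "paris" reaches it but drops a valid post about a stay in Paris, and the narrower phrase exclusion keeps both precision and coverage. On real data, coverage can only be estimated against whatever labelled sample the QA team has reviewed.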

Conclusion

Our findings show that although SM tools allow analysts to extract social media data easily, the extracted data may not be reliable or valid. This paper has identified six best practices that should be used to ensure high-quality data and that can serve as the starting point for continuing discussions in this area.

Gina Pingitore, Ph.D., is Chief Research Officer at J.D. Power and Associates.