By Michalis A. Michael

This is the first of a series of articles on Research World Connect about social media listening and analytics as a new but already integral part of market research. In this article and subsequent ones, we will attempt to shed some light on how social media listening and analytics should be done in order to be useful for market research practitioners. Case studies will be shared explaining how social listening has added value to the market research process and results by generating actionable customer insights.

Traditionally, market research has revolved around asking questions. Other, less ubiquitous data collection methods that are considered part of the traditional MR toolkit include retail audits, audience measurement, and desk research. With the proliferation of social media, which started in the mid-2000s, social listening and analytics slowly entered the scene. The pace of social listening adoption by market research professionals has been frustratingly slow; there are some good reasons behind that, which we’ll attempt to explain in this article.

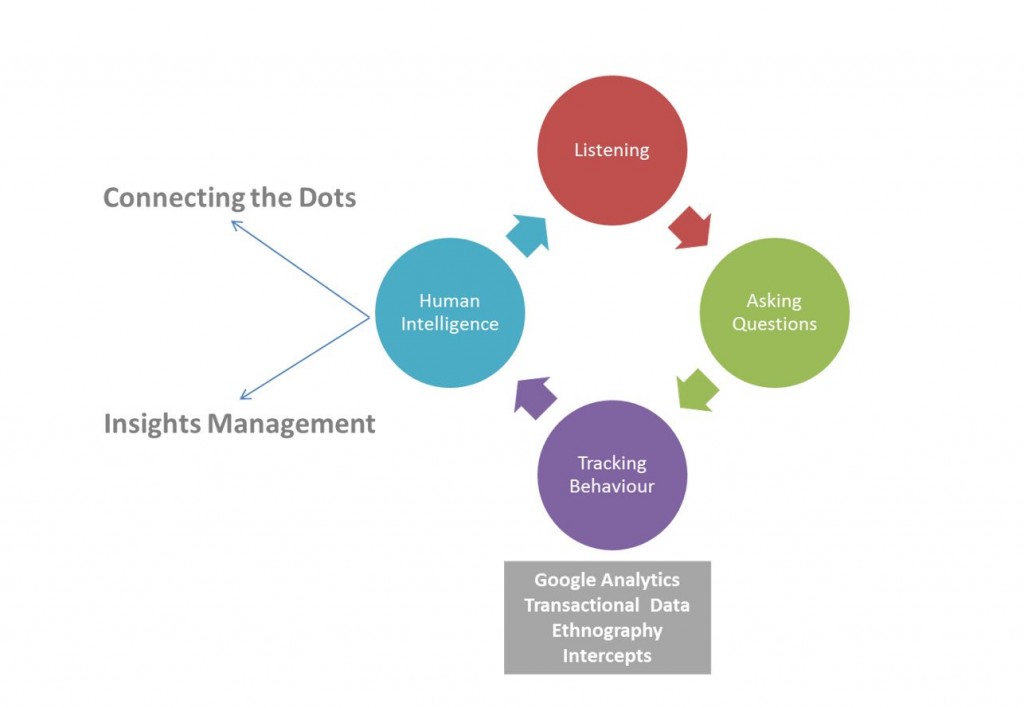

Besides asking questions and listening, tracking behaviour is the third method in what can be seen as a spectrum of data collection possibilities. Indeed, next generation market research comprises 3 pillars of data collection:

- Asking

- “Listening” (metaphorically speaking)

- Tracking Behaviour

Having set the stage comprehensively on the data collection side of things, let us consider what happens after we access the relevant data. It has to be said that market research buyers do not need more data; they just need actionable insights and a way to manage them within the business.

How do we do that? By integrating multiple data sources from all 3 pillars of data collection and connecting the dots to synthesize otherwise unattainable insights. We believe that in the near future, digital platforms will be adopted by end clients to serve as market research hubs (see Figure 1) that connect survey and focus group data (asking questions) to social listening feeds and behavioural data.

Figure 1: Digital Platforms as Market Research Hubs (©2016 DigitalMR®)

Why has the adoption pace for social listening been slow so far?

There are more than 400 social media monitoring tools out there; almost all of them have been developed by tech companies targeting Public Relations (PR) professionals. Sentiment and semantic accuracy never come up in a discussion with a PR Manager who is trying to buy access to a social media monitoring tool in order to manage online reputation; this is why, when market research managers started checking out the social media monitoring tools their PR colleagues were using, they were very disappointed with the data accuracy. Their conclusion was: “this is not an appropriate tool for market research”; trust was shattered and thus market research budgets were seldom allocated for social listening and analytics. Now that a few solutions are indeed available for market research purposes, with proof that they can deliver the required precision to be useful, it is tough work to persuade the market research budget holders to shift some money to social listening.

However, we are seeing a slow but steady uptake from traditional market research agencies and end clients. One of these end clients, Tom Emmers, Heineken Senior Director Global CMI, said: “I believe that today, given the rapid advancement of capabilities, we should only resort to surveys if we cannot find the answer in social listening or behavioural tracking data.”

How can we integrate social listening in current market research programmes?

The best way to introduce social listening into your organisation, if you haven’t already, is by defining the specifications of a pilot. The purpose of the pilot is to develop trust within the organisation; trust that social listening is a credible market research tool under certain circumstances (see “How do we measure accuracy?” below). This pilot could be about enhancing a consumer tracking study with listening KPIs, or as simple as evaluating a digital campaign. Digital campaigns are currently evaluated by looking at engagement metrics, but social listening can help us understand why people choose to share a video clip or not, why they express a positive sentiment towards the campaign/brand or not…

Is the sample representative?

Sample? What sample? There is no sample! In the world of social listening we do not need to limit ourselves to samples; we can access all the posts about a brand or a subject. This is what we would call Census Data. We should also keep in mind that it is not only those who post that matter, but also those who read. How many people could be impacted by reading these posts, and which countries are they in? There is a lot more to this subject that will be addressed in subsequent articles.

How do we measure accuracy?

Since we do not deal with samples in social listening, we cannot of course talk about confidence levels and standard errors. Instead, the main measures of accuracy for social listening analytics are:

- Precision (the fraction of retrieved instances that are relevant)

- Recall (the fraction of relevant instances that are retrieved)

- F1 score: $F_1 = 2 \times \dfrac{\text{precision} \times \text{recall}}{\text{precision} + \text{recall}}$

Precision and recall can be used to measure both sentiment and semantic accuracy. By “semantic accuracy”, we mean the accuracy of annotating a post with topics, sub-topics, and attributes. Acceptable (and realistic) levels of precision are over 80% for sentiment and over 85% for semantics at hierarchy 1 level (for topic annotation a hierarchical taxonomy with multiple hierarchies should be used).
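As a rough illustration of the three measures above, here is a minimal Python sketch that scores a tool’s sentiment annotations against human annotations of the same posts. The function name, the label values, and the six example posts are hypothetical and chosen only for illustration; they are not data from the study discussed in this article.

```python
from typing import Sequence, Tuple


def precision_recall_f1(
    gold: Sequence[str], predicted: Sequence[str], label: str
) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) for one label, e.g. "positive".

    gold      -- labels assigned by human annotators (assumed to be correct)
    predicted -- labels assigned by the listening tool to the same posts
    """
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")

    # Posts where the tool and the human annotators agree on this label.
    true_positives = sum(
        1 for g, p in zip(gold, predicted) if g == label and p == label
    )
    retrieved = sum(1 for p in predicted if p == label)  # tagged by the tool
    relevant = sum(1 for g in gold if g == label)        # tagged by humans

    precision = true_positives / retrieved if retrieved else 0.0
    recall = true_positives / relevant if relevant else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return precision, recall, f1


# Hypothetical example: six posts annotated by humans and by a tool.
gold = ["positive", "negative", "positive", "neutral", "positive", "negative"]
predicted = ["positive", "positive", "positive", "neutral", "negative", "negative"]

p, r, f1 = precision_recall_f1(gold, predicted, "positive")
print(f"precision={p:.2f} recall={r:.2f} F1={f1:.2f}")
```

The same function could be applied to topic annotation by passing topic labels instead of sentiment labels, one hierarchy level at a time.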

In the following articles over the next few weeks, data from a listening study about the market research industry will be shared. The ranking below is based on English posts harvested between July 1st and December 31st, 2015 from Twitter, blogs, boards, videos, and news sources. The noise elimination process has not been completed, but I could not resist sharing a sneak peek, even if the final numbers will be slightly different. Stay tuned!

| Rank | Company name | Number of posts |
| --- | --- | --- |
| 1 | YouGov | 130,771 |
| 2 | Ipsos | 112,816 |
| 3 | Kantar | 74,734 |
| 4 | Comscore | 68,222 |
| 5 | Gfk | 49,804 |
| 6 | Brandwatch | 33,361 |
| 7 | Nielsen | 17,746 |
| 8 | Sysomos | 12,892 |
| 9 | Vision Critical | 11,135 |
| 10 | Crimson Hexagon | 6,134 |
| 11 | Millward Brown | 6,088 |
| 12 | Dunnhumby | 5,715 |
| 13 | Maritz CX | 3,805 |
| 14 | Confirmit | 3,560 |
| 15 | Lieberman research world | 3,197 |
| 16 | Melwater buzz | 2,652 |
| 17 | TNS | 2,642 |
| 18 | Radian6 | 2,613 |
| 19 | NPD | 2,364 |
| 20 | Dub | 1,433 |
| 21 | DigitalMR | 1,370 |
| 22 | IRI | 1,006 |
| 23 | Brain Juicer | 987 |
| 24 | Focusvision | 615 |
| 25 | Macromill | 444 |

By Michalis A. Michael, DigitalMR. Connect with Michalis via @DigitalMR, his personal account @DigitalMR_CEO, or by email at mmichael@digital-mr.com

2 comments

Jeffrey, thank you for your comment. When I referred to Census Data I did not mean conducting a census of everything that is on social media. I meant that we can harvest everything that is available on the brands we are interested in; we do not need to limit ourselves to a sample of data when we have access to the available universe. You can use an analogy from retail audits: even though Nielsen and IRI did not have access to Wal-Mart scanning data, they still referred to what they were providing to their clients as Census Data.

I have not claimed that discussions of social media are representative of anything other than the people who posted them. I have however tried to make a strong point about the focus having to also be on “who reads” and not only “who writes”.

I agree with you about discovering more when the sources disagree, but I do not know on what you based your assumption that listening is overstated. By what measure is it overstated?

Thank you for sharing it further; it is debates like this that will make research practitioners trust this new discipline the same way they trust surveys, or even more :).

You can’t conduct a census of social media. Many people use Facebook and even Twitter privacy settings to restrict who can see their posts. You can conduct a census of public social media posts, but no one can analyze how representative that is of dark media posts.

Discussions on social media are not always representative of wider opinion. For instance, from our social media analysis, the rules of the NFL were a major cause of dissatisfaction, but the minority that felt this way was just exceptionally vocal. Those surveyed placed less emphasis on this.

I do like your 3 pillars of data collection, and will refer back to this, but I think you overstate the case for listening. I think where the sources disagree is what will make triangulation so powerful.