We see that automation will have a huge impact on the future of the workplace. What is PwC's activity in this area?

We believe that automation and artificial intelligence (AI) will have a profound impact on our organisation, which employs over 270,000 people globally. Our people are highly educated professionals dealing with huge amounts of data, so we want to ensure that we manage the disruptive effects of these technologies on our own terms.

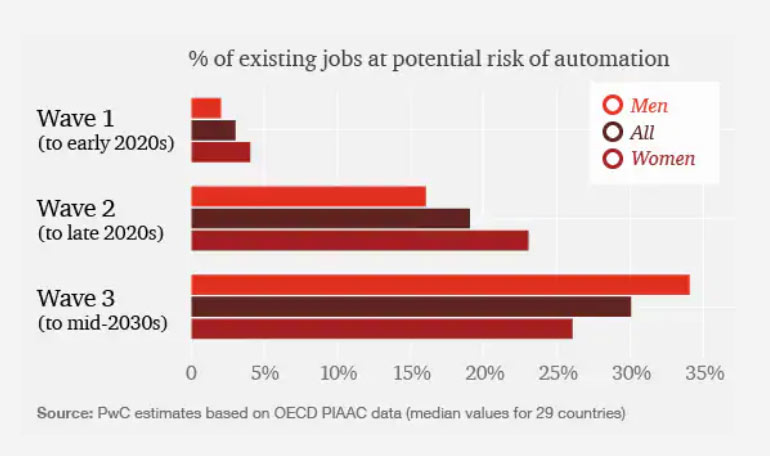

We also advise companies and governments who want to understand how this will affect them, so we have undertaken comprehensive analysis and research. We used our team of economists and analysts to model how susceptible jobs are to automation by technologies such as AI. The headline figure suggests that up to 30% of existing jobs could become highly susceptible to automation by the mid-2030s, with variation by industry sector, education level, gender and geography.

Another point is the economic impact of AI through greater productivity and increased consumption, which our studies suggest could add US$15.7 trillion to global GDP by 2030. That economic growth in turn creates demand for more labour, so our conclusion is that if organisations, governments and educational systems can embed the right processes for lifelong learning, the displacement of jobs could be offset by the creation of new ones. However, there are a number of ifs and buts to achieving that.

Automation risk is low for all education levels in the short term, but less well-educated workers are much more vulnerable in the long run

PwC analysed in detail the tasks involved in over 200,000 existing jobs across 29 countries to assess the potential for automation at various points over the next 20 years. It identifies three waves of automation that might unfold over this period:

– Wave 1 (to early 2020s): algorithmic

– Wave 2 (to late 2020s): augmentation

– Wave 3 (to mid-2030s): autonomy

The research and insights sector will also be impacted. Which key areas will be affected, and how quickly will this happen?

The opportunity posed by machine learning and AI could have a significant impact on this sector.

The first opportunity is to help professionals discern far more useful patterns in big data, removing some of the administrative burden and augmenting these professionals rather than replacing them. The opportunities to create new subcategories of professional roles within the sector are clear, but at the same time some tasks will be eliminated or reduced through the application of this technology.

Are there concerns about decisions made by AI (in healthcare, insurance or travel, for example) and their acceptance, given the issues arising around ethics and transparency?

There are a number of issues with the growth of AI technology, particularly the fact that the more powerful types often face an interpretability challenge: even some of those who configure this technology cannot always explain exactly how decisions are made.

This becomes a particular issue in situations with significant or profound consequences, often in highly regulated sectors such as healthcare or financial services. It is critical that systems are transparent and that organisations can inspect them. We need confidence in how decisions are made in order to mitigate the risks, avoid regulatory challenges and prevent the unintended consequences that automated decisions could lead to.

There are also a number of ethical challenges with technology such as automated facial recognition, so ethics experts will be needed to work alongside technology experts, not only to assess the immediate challenges that come from the technology but also to model the secondary and tertiary downside risks. I think this will be a critical type of role that will grow in prominence in the years to come, whether in the research and insights sector or beyond, for example in the audit profession and the healthcare sector, amongst others.

Are you concerned that there is a huge under-representation of women and minorities in AI and the tech world generally, which could lead to bias in algorithms and in the decisions that result from them? Can this be addressed, and if so, how?

It is clear that the workforce developing the technology is overwhelmingly white and male, and we have already seen quite poor outcomes from technology that has not taken all parts of society into account. Diversity is a fundamental requirement for all organisations to address now, and we have to pay attention not just to gender and ethnic balance in the workforce but also to social mobility and to enabling people with all kinds of disabilities to work in these teams.

One of the big challenges was highlighted by a study we launched two years ago, in which we surveyed young girls about their preconceptions of technology careers. The statistics were eye-opening. A very small proportion of them had considered technology as a first-choice career, and only a tiny minority could name a leading female role model in the technology industry. Compared with the boys who were considering a career in technology, there is a clear divide.

There are already some fantastic initiatives underway to encourage women into the profession and change the gender ratio in tech roles in the UK. For instance, we have launched the TechSheCan Charter, which has attracted over 100 organisations as signatories, to adapt public policy, champion role models, raise visibility and bring parallel initiatives and best practices together under one banner. The aim is to positively influence and reach young girls at an early stage, before they have made up their minds about whether this could be a career for them.

We already see a huge imbalance not only between individuals who have tech skills and those who do not, but also between town and country, and between regions and age cohorts. How can the benefits be spread more equitably?

To return to the economic study I referenced earlier, and the overall US$15.7 trillion potential contribution to the global economy from AI by 2030: it showed a very clear geographical divide. The greatest economic gains from AI will be in China (a 26% boost to GDP by 2030) and North America (an additional 14.5% of GDP over the same period), with Europe at about 10-12% and many other countries much lower, so there is already the potential to exacerbate inequality in growth.

At the national level, a number of governments have launched AI strategies, and most of the ones I have seen so far acknowledge that the benefits of AI have to be spread across the breadth of society; otherwise you risk a backlash against the technology if it leads to a concentration of wealth in certain parts of society.

In the UK, for example, an industrial strategy was launched last year in which AI was announced as a grand challenge, with a billion pounds of funding dedicated to it. Within that, there were a number of policy announcements focused on the regions, aimed at stimulating innovation and entrepreneurship, kick-starting growth and spreading innovation.

Would you say that research companies and their clients have AI strategies in place for staff and education?

There is already very high demand for AI practitioners; another report suggested that in the UK, for example, demand for these skills has increased by 231% over the last five years. These skills will continue to be in high demand in the years to come, and companies have to think about how they attract, develop and retain this expertise.

They also need to consider, at a much higher level, the very essence and purpose of their organisation. AI has the potential to tackle some very intractable problems, for instance reducing waste and inefficiency and improving our lot generally, but if it is not considered holistically there could be downside risks. Companies therefore have to consider the role they play in the communities they serve and think about how they can spread the benefits of the technology. There is a responsibility to treat this as more than just a tech investment.

Rob McCargow is Director of AI at PwC. He is an advisory board member of the UK’s All-Party Parliamentary Group on AI, an adviser to The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, a TEDx speaker, and a Fellow of The RSA.